Amazon Luna is Amazon’s cloud gaming platform, which goes live for early-access customers in October. The most interesting part is that Luna is a progressive web app for iOS. This approach lets Amazon circumvent Apple’s App Store fees. As a user, you’ll simply download the PWA from the...

Tag: entertainment

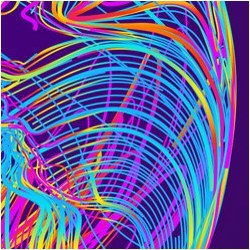

The Challenge of Crafting Intelligible Intelligence | June 2019 | Communications of the ACM

Modern AI approaches often work like black boxes: nobody really knows why they produce the results they do. Explanations of why an AI system reached a particular conclusion are clearly needed. The article by Daniel S. Weld and Gagan Bansal [1] examines two promising approaches: using an inherently interpretable model, or adopting an...