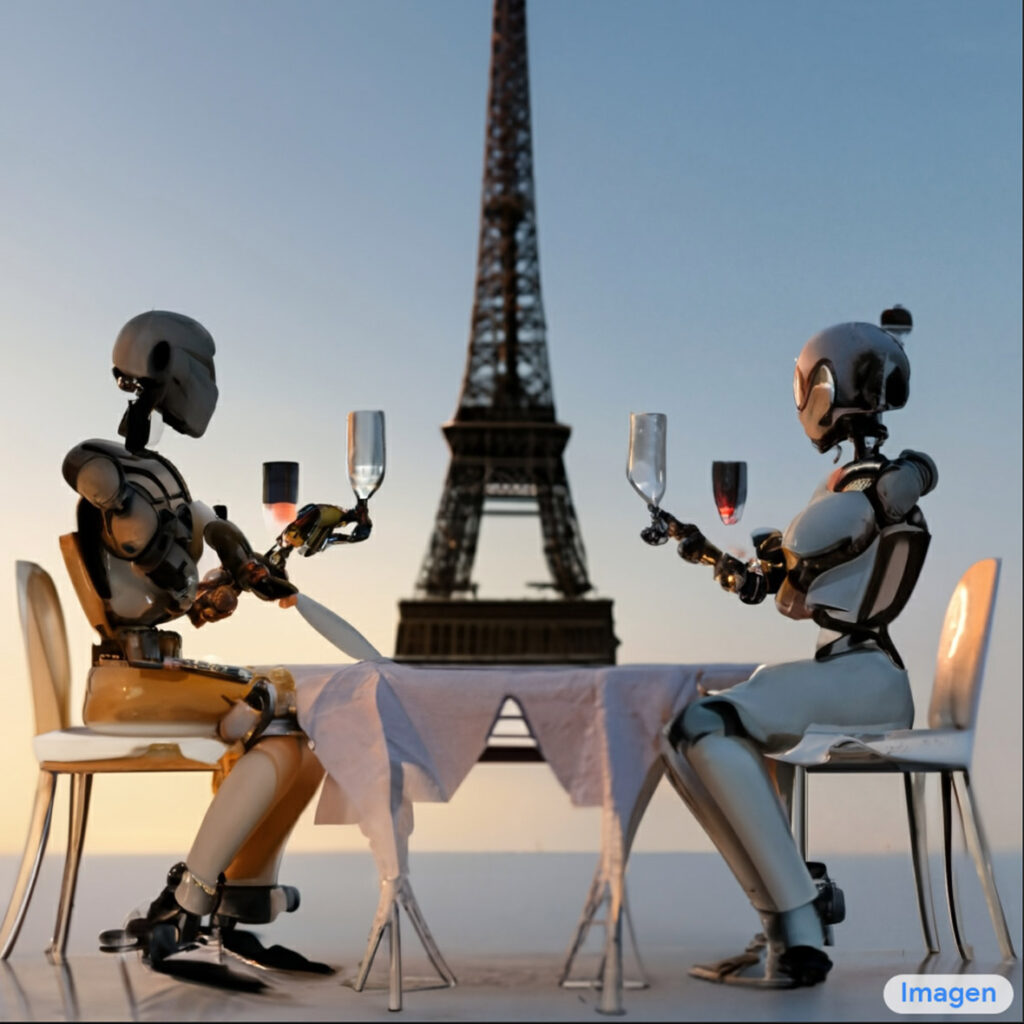

Text-to-image artificial intelligence programs have been around for a while now. However, a new AI program called Imagen, developed by Google, is able to generate realistic images based on textual descriptions, and the results are pretty impressive.

Imagen first encodes the natural language input into text embeddings. A conditional diffusion model then maps those embeddings to a small 64x64 image. Finally, Imagen employs text-conditional super-resolution diffusion models to increase the 64x64 image's resolution, first to 256x256 and then to 1024x1024.
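The data flow described above can be traced with a short Python sketch. This is not Google's code and it generates no images: the `Stage` table, `encode_text`, and `run_cascade` are hypothetical names invented for illustration, and the toy encoder merely stands in for a real text encoder. It only shows how a single text embedding conditions each stage while the image side length grows from 64 to 256 to 1024 pixels.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    in_size: int   # side length of the input image; 0 means "starts from noise"
    out_size: int  # side length of the output image


# Hypothetical stage table that mirrors the resolutions named in the text.
CASCADE = [
    Stage("base text-conditional diffusion", 0, 64),
    Stage("super-resolution diffusion (64 -> 256)", 64, 256),
    Stage("super-resolution diffusion (256 -> 1024)", 256, 1024),
]


def encode_text(prompt: str, dim: int = 8) -> List[float]:
    """Toy stand-in for a text encoder: folds character codes into a
    fixed-length vector. A real encoder would be a learned language model."""
    vec = [0.0] * dim
    for i, ch in enumerate(prompt):
        vec[i % dim] += ord(ch) / 1000.0
    return vec


def run_cascade(prompt: str) -> List[Tuple[str, int]]:
    """Trace the image side length through each stage of the cascade."""
    embedding = encode_text(prompt)
    assert len(embedding) > 0  # every stage would be conditioned on this vector
    size = 0
    trace = []
    for stage in CASCADE:
        # Each stage must receive the resolution the previous one produced.
        if stage.in_size != size:
            raise ValueError(f"{stage.name} expects {stage.in_size}px, got {size}px")
        size = stage.out_size
        trace.append((stage.name, size))
    return trace


if __name__ == "__main__":
    for name, size in run_cascade("a cute corgi in a house made of sushi"):
        print(f"{name}: {size}x{size}")
```

The point of the cascade is that the hard part, matching the image to the text, happens at low resolution where it is cheap, and the later stages only have to add detail.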

Imagen's flexibility and results are significantly better than those of NVIDIA's GauGAN2 method from last fall, and the technology is improving rapidly. Check out the image below, which was generated from the text 'a cute corgi who lives in a house built out of sushi'. The house looks real, as if someone had built it from sushi, which the corgi obviously loves.

Where are the people?

We can assume that users will start typing all kinds of phrases about people, whether innocently or maliciously. There will be many text inputs about cute animals in funny situations. But there will also be text about doctors, chefs, athletes, mothers, fathers, children, and other people. What would these people look like? Would doctors be mostly men, flight attendants mostly women, and most people light-skinned?

Because Google has chosen not to reveal any information, we don't know what Imagen does with these types of inputs. Text-to-image research presents ethical issues. How well can a model present objective results if it can create any image from text? Imagen is an AI model that was trained largely on data from the internet. The internet's content is biased and distorted in ways we don't fully understand. These biases can have negative social impacts that are worth considering and, hopefully, rectifying. Google also used the LAION-400M dataset for Imagen, which is known to contain 'a wide range of inappropriate content including pornographic imagery, racist slurs, and harmful social stereotypes'. Although a subset of the training data was filtered to remove noise and 'undesirable content', there is still a risk that Imagen has encoded harmful stereotypes or representations.

Not for the public (yet?)

Due to the aforementioned ethical issues, Google has decided not to release Imagen to the public. Instead, Google's website allows you to click on certain words to view results. According to early research, the model is more likely to show images of people with lighter skin tones and to reinforce gender roles, and its depiction of certain events and items may reflect cultural biases.

Google knows that representation issues are a problem across its large range of products. Although many skilled and thoughtful people work behind the scenes to build AI models, once a model has been released, it is pretty much on its own. It is difficult to predict what will happen when users can type anything they like.

These biases and other issues are a problem for any model trained on large datasets. As such, AI models can produce very harmful content. Many illustrators would refuse to draw or paint something truly horrific. Text-to-image AI models have no such moral judgment and can produce any image. This is a problem, and it is not clear how to fix it.

While AI research teams struggle with the moral and societal implications of their incredible work, you can still view eerily realistic images of skateboarding pandas.

Of course, there are still some limitations. For example, the program has trouble with concepts that are hard to visualize, like “love” or “justice.” And, of course, the images it creates are only as good as the text descriptions it’s given.

Still, it’s a pretty impressive accomplishment, and it’s likely that the program will only get better as it continues to be developed.

Source: DPReview

Images by Google
