Major AI labs, including OpenAI, are shifting their focus away from building ever-larger language models (LLMs). Instead, they are exploring "test-time compute", where models receive extra processing time during execution to produce better results. This change stems from the limitations of traditional pre-training methods, which have reached a plateau in performance and are becoming too...

Tag: LLMs

The Trend Towards Smaller Language Models in AI

The landscape of artificial intelligence (AI) is undergoing a notable transformation, shifting from the pursuit of ever-larger language models (LLMs) to the development of smaller, more efficient models. This shift, driven by technological advancements and practical considerations, is redefining how AI systems are built, deployed, and utilized across various sectors. The Shift in AI Model...

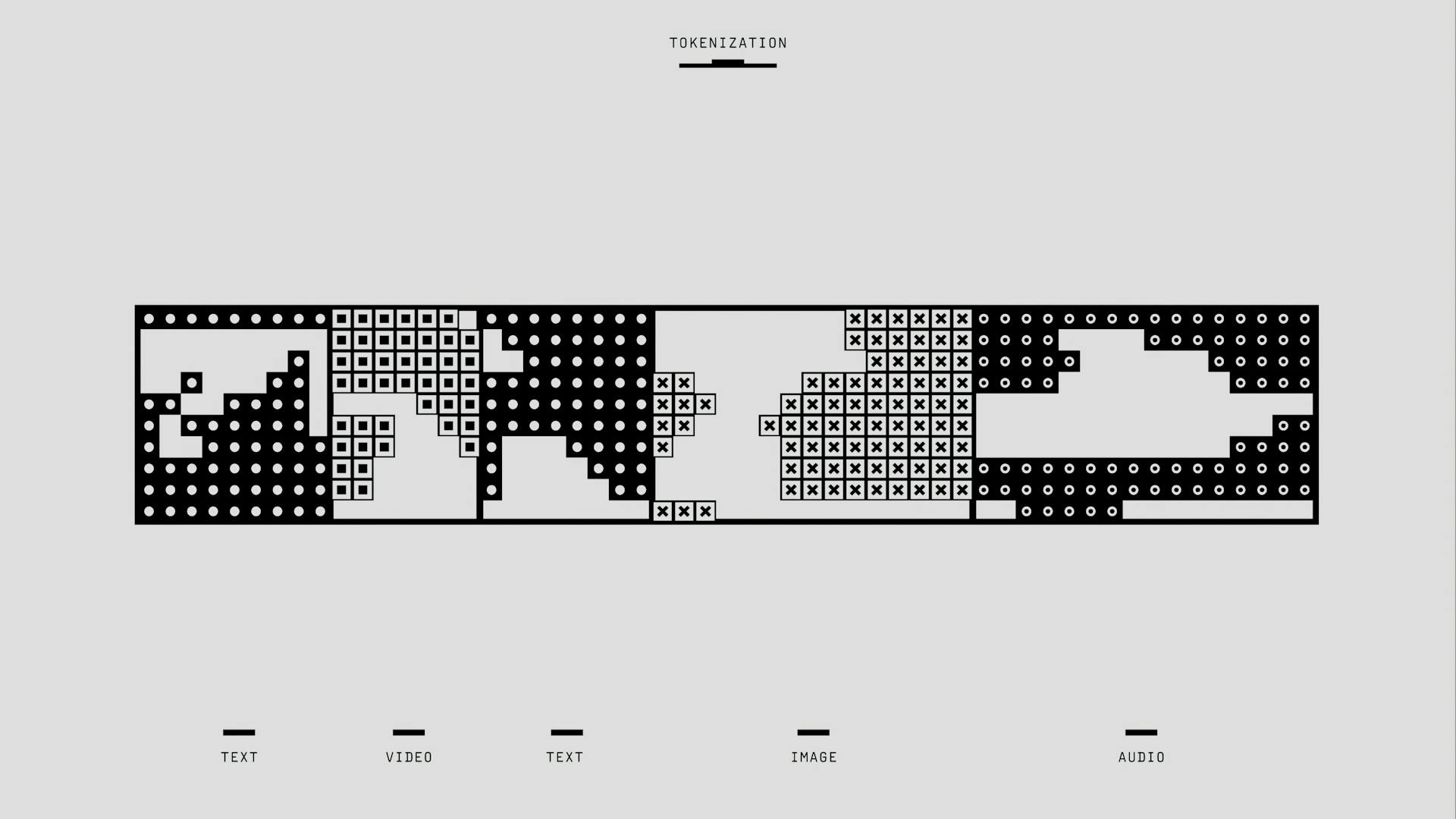

Understanding Tokens in AI

In the realm of Artificial Intelligence (AI), particularly in the context of Large Language Models (LLMs), tokens are the fundamental units of language that enable machines to comprehend and generate human-like text. This article delves into the world of tokens, exploring their definition, history, types, and significance in the AI landscape. What are Tokens? In...

FraudGPT and other malicious AIs are the new frontier of online threats. What can we do?

The internet, a vast and indispensable resource for modern society, has a darker side where malicious activities thrive. From identity theft to sophisticated malware attacks, cyber criminals keep coming up with new scam methods. Widely available generative artificial intelligence (AI) tools have now added a new layer of complexity to the cyber security landscape. Staying...

Embeddings: The Secret Sauce in AI’s Data Cuisine

In the world of artificial intelligence (AI), data is the main ingredient. But just like raw ingredients need preparation before they become a delicious meal, data must be transformed into a palatable format for AI systems, particularly large language models (LLMs), to "digest." This is where embeddings enter the kitchen, serving as the secret sauce...

Why Software Engineers Should Embrace Philosophy

In the world of software engineering, where lines of code and caffeine reign supreme, there’s an unlikely ally waiting in the wings: philosophy. Yes, you read that right. The ancient art of pondering existence, ethics, and the meaning of life can turbocharge your coding skills. Here’s why software engineers should dive into the realm of...

LoRA vs. Fine-Tuning LLMs

LoRA (Low-Rank Adaptation) and fine-tuning are two methods to adapt large language models (LLMs) to specific tasks or domains. LLMs such as GPT-3, RoBERTa, and DeBERTa are pre-trained on massive amounts of general-domain data and have shown impressive performance on various natural language processing (NLP) tasks. Why fine-tune an LLM? Fine-tuning of LLMs...

Super Apps vs. Large Language Models

In the rapidly evolving world of software, Super Apps and Large Language Models (LLMs) offer two distinct approaches to address user needs and preferences. Super Apps, like WeChat, consolidate various functions and services into a single platform, while LLMs, such as GPT from OpenAI, promise a new era of software adaptability through self-modifying code generation....

Why Large Language Models will replace Apps

The dawn of large language models (LLMs) like GPT from OpenAI that can write their own code and adapt to novel requirements promises a more flexible approach to software development. In this article, we will explore in detail why apps as we know them will soon be a thing of the past and how LLMs...