AI hallucinations refer to instances where a model generates a confident response that sounds plausible but is factually incorrect or entirely fabricated. For example, an AI chatbot might cite a nonexistent legal case or invent a scientific-sounding explanation out of thin air. These aren’t intentional lies – they result from the way generative AI...

Tag: LLMs

The New Unit Test: How LLM Evals Are Redefining Quality Assurance

Picture this: you've written a function that calculates the square root of a number. Feed it the input 16, and you'll get 4 back every single time—guaranteed. This predictability is the bedrock of traditional software testing, where unit tests verify that each piece of code behaves exactly as expected with surgical precision. Now imagine a...
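To make the contrast concrete, here is a minimal Python sketch. The square-root check is an ordinary unit test with one exact expected output. The eval half uses a hypothetical `fake_llm` stand-in and a simple number-matching grader (both invented for illustration, not part of any real eval framework) and reports a pass rate over several samples instead of a single pass/fail.

```python
import math
import random
import re


def square_root(x: float) -> float:
    return math.sqrt(x)


def test_square_root() -> None:
    # Traditional unit test: one input, one exact expected output, every run.
    assert square_root(16) == 4.0


def fake_llm(prompt: str, rng: random.Random) -> str:
    # Hypothetical stand-in for a model: the same prompt can yield different text.
    return rng.choice(["4", "The square root of 16 is 4.", "4.0", "It is 8."])


def grade(answer: str) -> bool:
    # Hypothetical grader: take the last number in the answer and compare it to 4.
    numbers = re.findall(r"-?\d+(?:\.\d+)?", answer)
    return bool(numbers) and float(numbers[-1]) == 4.0


def run_eval(prompt: str, n_samples: int = 20, seed: int = 0) -> float:
    # Eval-style check: sample repeatedly and report the fraction judged correct.
    rng = random.Random(seed)
    passed = sum(grade(fake_llm(prompt, rng)) for _ in range(n_samples))
    return passed / n_samples


if __name__ == "__main__":
    test_square_root()
    print(f"pass rate: {run_eval('What is the square root of 16?'):.2f}")
```

The unit test either passes or fails; the eval produces a score, and the grader's leniency (here, accepting "4", "4.0" or a full sentence) becomes a design decision in its own right.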

Why AI Keeps Making Stuff Up: The Real Reason Language Models Hallucinate

Picture this: You ask a state-of-the-art AI chatbot for someone's birthday, specifically requesting an answer only if it actually knows. The AI confidently responds with three different dates across three attempts, all of them wrong. The correct answer? None of the confident fabrications even landed in the right season. This isn't a glitch. According to new research from OpenAI,...

AI Gets Its “I Know Kung Fu” Moment: Researchers Create Instant Expertise Downloads for Language Models

Remember that iconic scene in The Matrix where Neo gets martial arts expertise instantly downloaded into his brain, then opens his eyes and declares "I know kung fu"? Researchers have essentially created the AI equivalent of that moment. A team of scientists has developed Memory Decoder, a breakthrough technique that can instantly grant language models...

Software 3.0 Revolution: The AI-Driven Programming Paradigm Shift

The software development landscape has undergone a seismic transformation, with AI coding assistants reaching 76% developer adoption and $45 billion in generative AI funding in 2024 alone. Andrej Karpathy's prophetic Software 1.0/2.0 framework now extends to Software 3.0, where natural language programming has become reality and "vibe coding" is democratizing software creation for millions. This...

Test-Time Compute: The Next Frontier in AI Scaling

Major AI labs, including OpenAI, are shifting their focus away from building ever-larger language models (LLMs). Instead, they are exploring "test-time compute", where models receive extra processing time during execution to produce better results. This change stems from the limitations of traditional pre-training methods, which have reached a plateau in performance and are becoming too...
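One common way to spend extra compute at inference time is to sample several answers and keep the most frequent one (majority voting, sometimes called self-consistency). The sketch below assumes a hypothetical `noisy_model` that answers correctly 60% of the time. It illustrates the general idea only and is not a description of how OpenAI or any other lab implements test-time compute.

```python
import random
from collections import Counter


def noisy_model(question: str, rng: random.Random) -> str:
    # Hypothetical stand-in: right ("42") 60% of the time, otherwise a wrong answer.
    return rng.choices(["42", "41", "43"], weights=[0.6, 0.2, 0.2])[0]


def majority_vote(answers: list[str]) -> str:
    # Return the most common answer; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


def answer_with_more_compute(question: str, n_samples: int, seed: int = 0) -> str:
    # More samples means more inference-time compute and a more reliable vote.
    rng = random.Random(seed)
    samples = [noisy_model(question, rng) for _ in range(n_samples)]
    return majority_vote(samples)


if __name__ == "__main__":
    for n in (1, 5, 101):
        print(n, answer_with_more_compute("What is 6 * 7?", n))
```

A single sample is wrong 40% of the time under this assumption, while a vote over many samples almost always lands on the majority answer; the trade-off is that cost grows linearly with `n_samples`.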

The Trend Towards Smaller Language Models in AI

The landscape of artificial intelligence (AI) is undergoing a notable transformation, shifting from the pursuit of ever-larger language models (LLMs) to the development of smaller, more efficient models. This shift, driven by technological advancements and practical considerations, is redefining how AI systems are built, deployed, and utilized across various sectors. The Shift in AI Model...

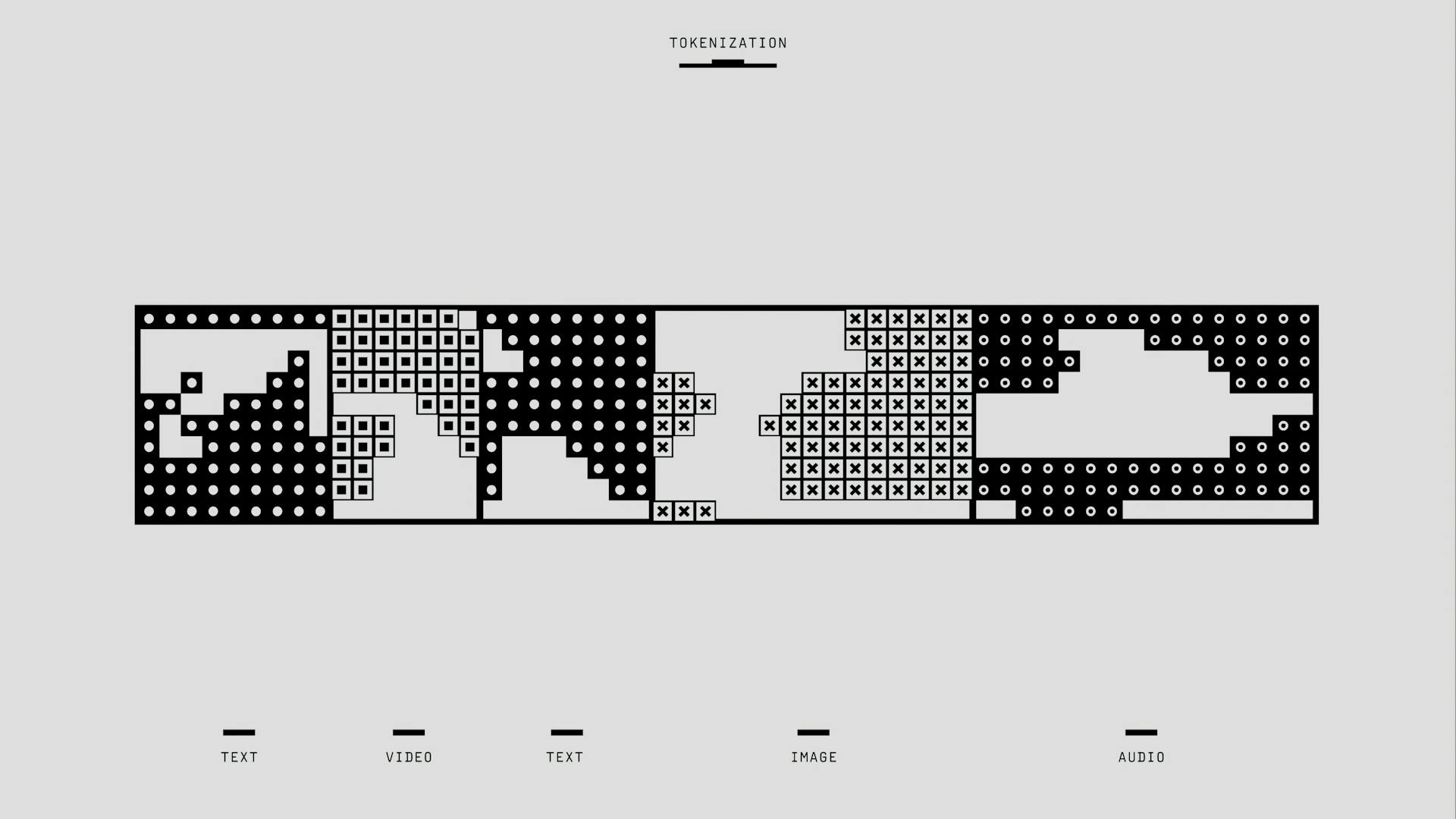

Understanding Tokens in AI

In the realm of Artificial Intelligence (AI), particularly in the context of Large Language Models (LLMs), tokens are the fundamental units of language that enable machines to comprehend and generate human-like text. This article delves into the world of tokens, exploring their definition, history, types, and significance in the AI landscape. What are Tokens? In...
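As a rough illustration, here is a deliberately simplified Python tokenizer that splits text into words and punctuation marks, assigns each distinct token an integer ID, and maps IDs back to tokens. Production LLMs use learned subword schemes such as byte-pair encoding instead, so treat the splitting rule here as an illustrative assumption.

```python
import re


def tokenize(text: str) -> list[str]:
    # Split into runs of word characters or single punctuation marks; drop whitespace.
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    # Assign IDs in order of first appearance.
    vocab: dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    return [vocab[tok] for tok in tokens]


def decode(ids: list[int], vocab: dict[str, int]) -> list[str]:
    inverse = {i: tok for tok, i in vocab.items()}
    return [inverse[i] for i in ids]


if __name__ == "__main__":
    tokens = tokenize("the cat and the hat")
    vocab = build_vocab(tokens)
    print(encode(tokens, vocab))  # [0, 1, 2, 0, 3]
    print(tokenize("Tokens aren't words!"))
```

Even this toy version splits "aren't" into three tokens ("aren", "'", "t"), a small hint at why token counts and word counts differ.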

FraudGPT and other malicious AIs are the new frontier of online threats. What can we do?

The internet, a vast and indispensable resource for modern society, has a darker side where malicious activities thrive. From identity theft to sophisticated malware attacks, cyber criminals keep coming up with new scam methods. Widely available generative artificial intelligence (AI) tools have now added a new layer of complexity to the cyber security landscape. Staying...

Embeddings: The Secret Sauce in AI’s Data Cuisine

In the world of artificial intelligence (AI), data is the main ingredient. But just like raw ingredients need preparation before they become a delicious meal, data must be transformed into a palatable format for AI systems, particularly large language models (LLMs), to "digest." This is where embeddings enter the kitchen, serving as the secret sauce...
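Once text has been turned into vectors, "similar meaning" becomes "nearby vectors", commonly measured with cosine similarity. The three-dimensional vectors below are made up for illustration (real embedding models produce hundreds or thousands of dimensions), so this is a sketch of the comparison step only, not of how any particular model learns its embeddings.

```python
import math

# Hypothetical toy embeddings; values are invented for illustration.
EMBEDDINGS: dict[str, list[float]] = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Dot product divided by the product of the vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word: str, embeddings: dict[str, list[float]]) -> str:
    # Nearest neighbour by cosine similarity, excluding the query word itself.
    query = embeddings[word]
    candidates = (w for w in embeddings if w != word)
    return max(candidates, key=lambda w: cosine_similarity(query, embeddings[w]))


if __name__ == "__main__":
    print(most_similar("cat", EMBEDDINGS))  # kitten
```

Cosine similarity ignores vector length and compares direction only, which is why it is a common default for comparing embeddings.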